Reliable data pipelines made easy

Simplify batch and streaming ETL with automated reliability and built-in data quality.

TOP TEAMS SUCCEED WITH INTELLIGENT DATA PIPELINES

Data pipeline best practices, codified

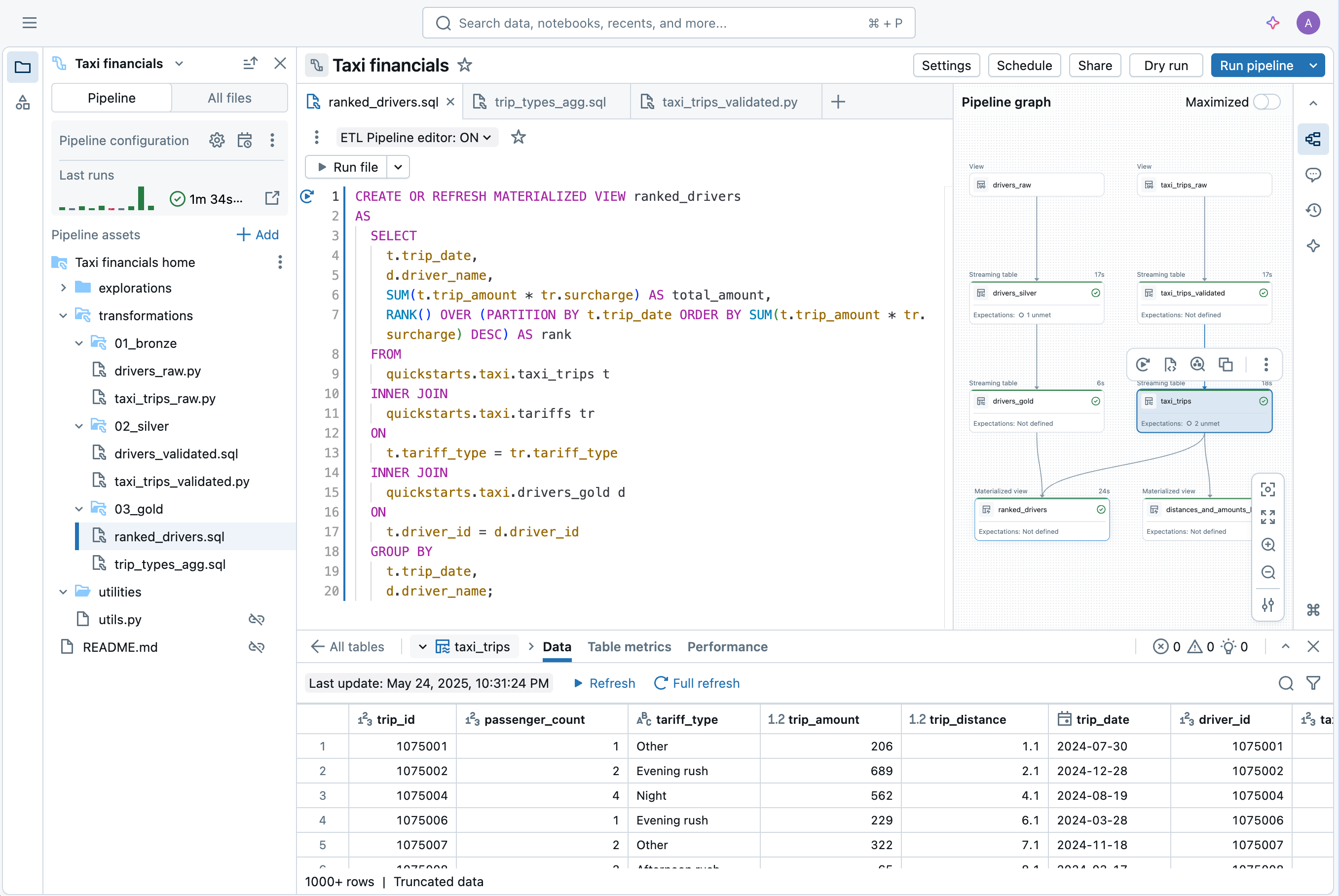

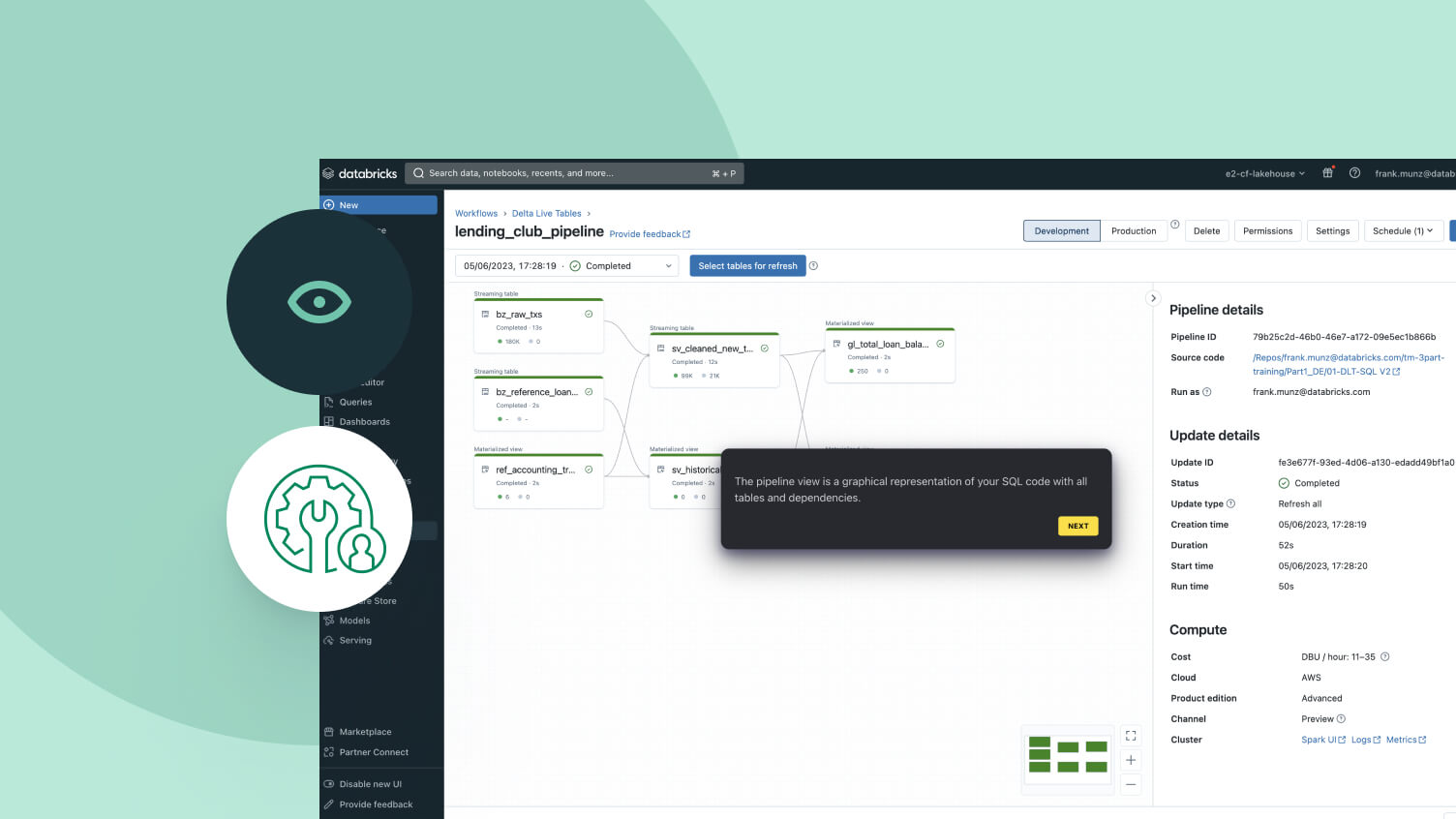

Simply declare the data transformations you need and let Spark Declarative Pipelines handle the rest.

Built to simplify data pipelining

Building and operating data pipelines can be hard, but it doesn’t have to be. Spark Declarative Pipelines is designed for simplicity without sacrificing power, so you can perform robust ETL with just a few lines of code.

Because it builds on Spark’s unified API for batch and stream processing, Spark Declarative Pipelines lets you easily switch between processing modes.
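
To make the declarative idea concrete, here is a minimal, framework-free sketch in plain Python. It is not the Spark Declarative Pipelines API: the `dataset` decorator, `run_pipeline` function, and sample order data are hypothetical, invented only for illustration. It shows the general principle: each dataset is declared as a function of its upstream datasets, and the runner, not the author, derives a valid execution order from the dependency graph.

```python
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical registry: dataset name -> (upstream dataset names, function).
# Illustrates declarative pipelines in general; NOT the Spark Declarative Pipelines API.
_REGISTRY: Dict[str, Tuple[List[str], Callable[..., list]]] = {}

def dataset(depends_on: Sequence[str] = ()):
    """Hypothetical decorator: register a function as a named dataset definition."""
    def register(fn: Callable[..., list]) -> Callable[..., list]:
        _REGISTRY[fn.__name__] = (list(depends_on), fn)
        return fn
    return register

def run_pipeline() -> Dict[str, list]:
    """Order datasets by their dependencies, then compute each one exactly once."""
    graph = {name: deps for name, (deps, _) in _REGISTRY.items()}
    results: Dict[str, list] = {}
    for name in TopologicalSorter(graph).static_order():
        deps, fn = _REGISTRY[name]
        results[name] = fn(*(results[d] for d in deps))
    return results

# Declarations can appear in any order; the runner derives execution order.
@dataset(depends_on=["clean_orders"])
def order_totals(clean_orders: list) -> list:
    return [sum(o["amount"] for o in clean_orders)]

@dataset(depends_on=["raw_orders"])
def clean_orders(raw_orders: list) -> list:
    return [o for o in raw_orders if o["amount"] > 0]  # drop invalid amounts

@dataset()
def raw_orders() -> list:
    return [{"id": 1, "amount": 30}, {"id": 2, "amount": -5}, {"id": 3, "amount": 12}]

if __name__ == "__main__":
    print(run_pipeline()["order_totals"])  # [42]
```

Note that `order_totals` is declared before its inputs; the topological sort still materializes `raw_orders` first. A real pipeline engine also handles incremental processing, retries, and data quality checks, all of which this sketch omits.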

More features

Streamline your data pipelines

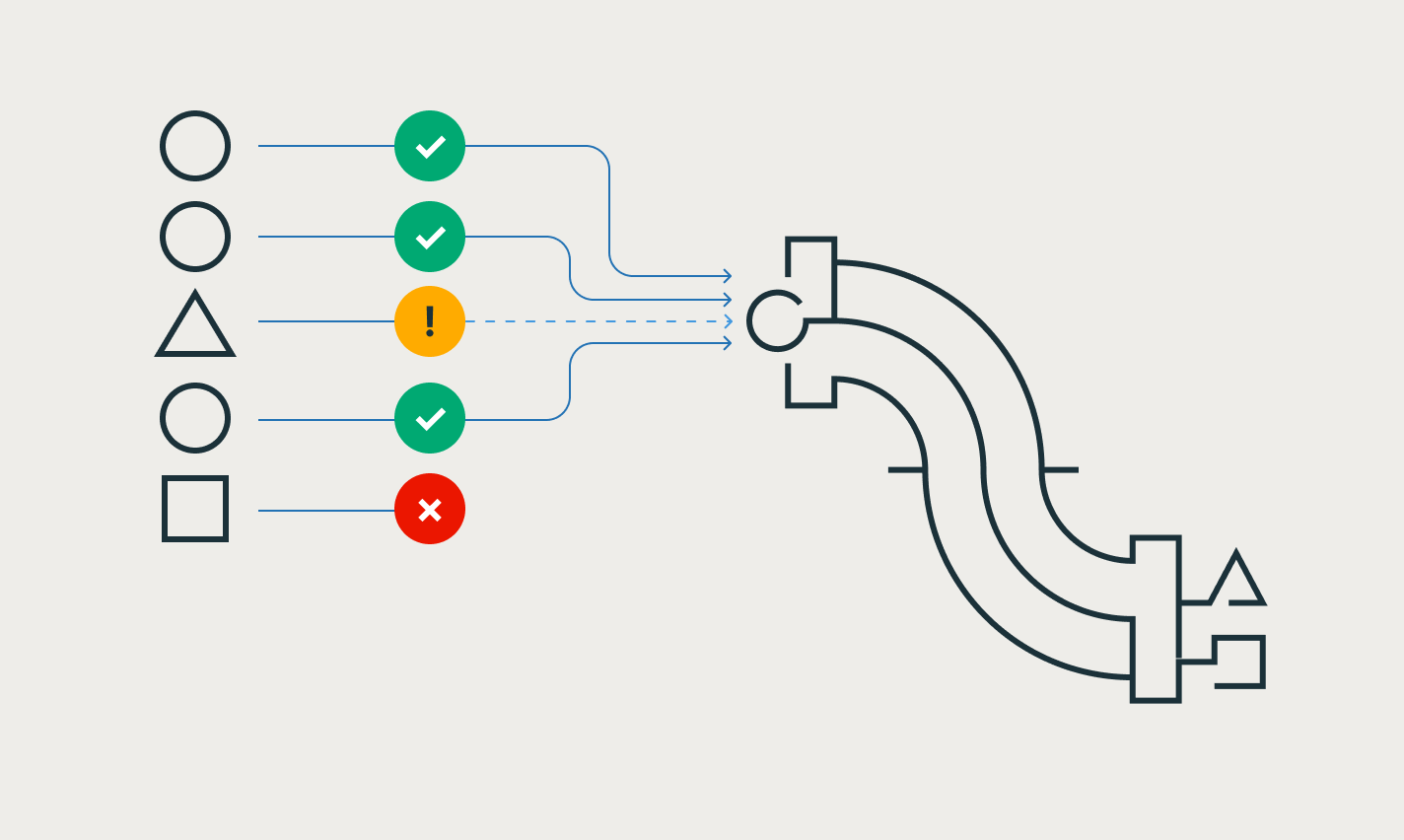

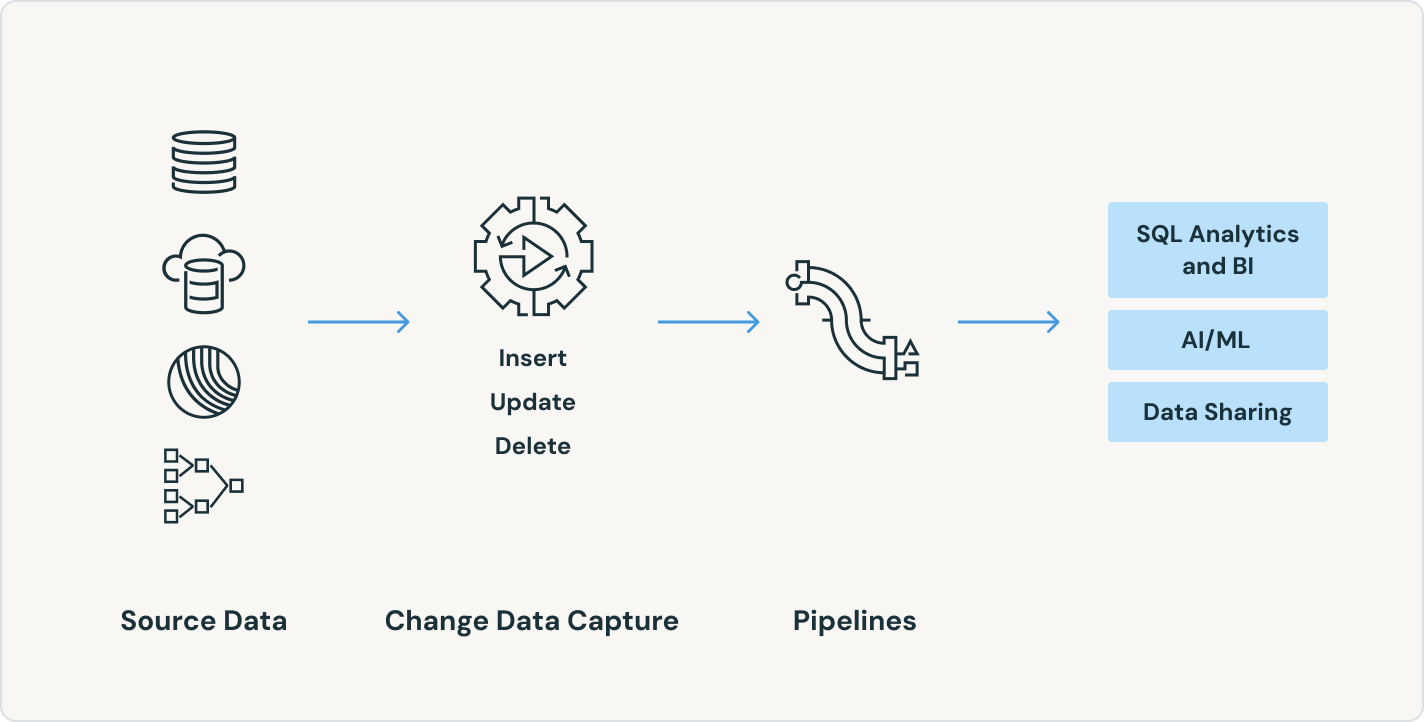

Easily ensure data integrity and consistency

Simplify change data capture (CDC) with the APPLY CHANGES APIs, which work with both change data feeds and database snapshots. Spark Declarative Pipelines automatically handles out-of-sequence records for slowly changing dimension (SCD) Type 1 and Type 2 tables, simplifying the hardest parts of CDC.
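
The sketch below illustrates, in plain Python, what SCD Type 1 and Type 2 processing mean when change records arrive out of order. It is not the APPLY CHANGES API: the `Change` and `Version` types, the `seq` ordering field, and the `UPSERT`/`DELETE` operation codes are hypothetical. Ordering changes by their sequence value rather than by arrival is what puts a late-arriving older record in the right place. For simplicity it processes one complete set of changes at once; a production engine must also reconcile records that arrive in later batches.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical illustration of SCD Type 1/2 semantics; NOT the APPLY CHANGES API.

@dataclass(frozen=True)
class Change:
    key: str                     # business key, e.g. a user id
    seq: int                     # ordering value; arrival order may differ
    op: str                      # "UPSERT" or "DELETE" (hypothetical codes)
    value: Optional[str] = None  # new attribute value for upserts

@dataclass
class Version:
    key: str
    value: Optional[str]
    start_seq: int
    end_seq: Optional[int] = None  # None marks the current version

def scd_type1(changes: List[Change]) -> Dict[str, Optional[str]]:
    """Type 1: overwrite in place, keeping only the latest state per key."""
    state: Dict[str, Optional[str]] = {}
    for c in sorted(changes, key=lambda ch: ch.seq):  # order by seq, not arrival
        if c.op == "DELETE":
            state.pop(c.key, None)
        else:
            state[c.key] = c.value
    return state

def scd_type2(changes: List[Change]) -> List[Version]:
    """Type 2: keep history; each change closes the key's current version."""
    history: List[Version] = []
    current: Dict[str, Version] = {}
    for c in sorted(changes, key=lambda ch: ch.seq):
        previous = current.pop(c.key, None)
        if previous is not None:
            previous.end_seq = c.seq
        if c.op != "DELETE":
            version = Version(c.key, c.value, c.seq)
            history.append(version)
            current[c.key] = version
    return history

# Sample changes, deliberately out of order: u1's seq-1 record arrives late.
changes = [
    Change("u1", 2, "UPSERT", "Paris"),
    Change("u1", 1, "UPSERT", "Lyon"),
    Change("u2", 1, "UPSERT", "Oslo"),
    Change("u2", 3, "DELETE"),
]

if __name__ == "__main__":
    print(scd_type1(changes))
    for v in scd_type2(changes):
        print(v)
```

With this sample input, Type 1 keeps only `{"u1": "Paris"}` because u2 was deleted. Type 2 keeps both of u1's versions, closing the Lyon version at sequence 2, and closes u2's Oslo version at the delete.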

Unlock powerful real-time use cases without extra tooling

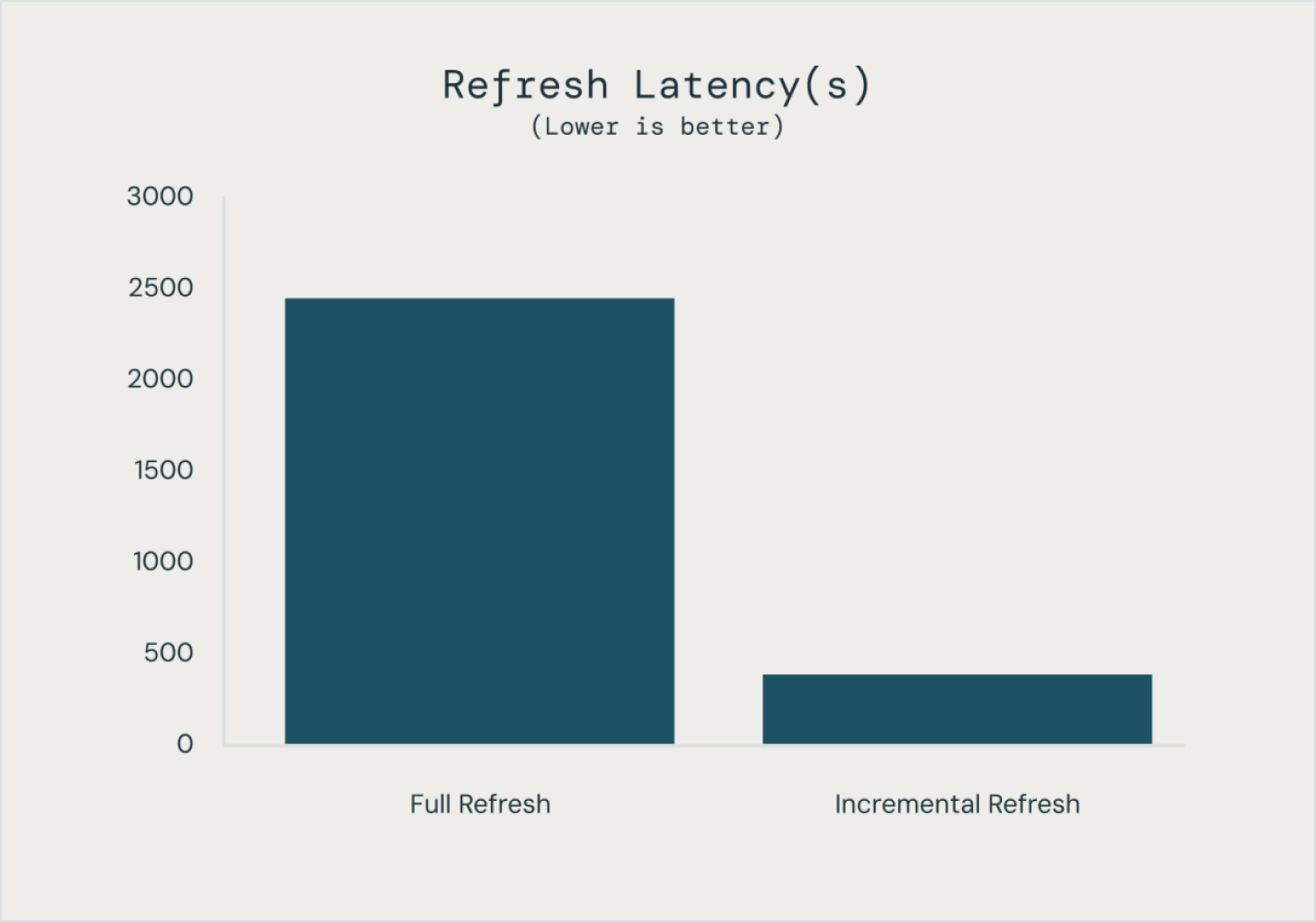

Build and run your batch and streaming data pipelines in one place, with both controllable and automated refresh settings, saving time and reducing operational complexity. Operationalize streaming data so your analytics and AI work from the freshest data, improving both their accuracy and actionability.
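
One way to picture incremental refresh is a small aggregate that remembers how far into an append-only source it has read, so each refresh touches only new records yet still matches a full batch recompute. The `IncrementalCounts` class, its offset-based checkpoint, and `full_recompute` are hypothetical, plain-Python assumptions for illustration; they are not how Spark Declarative Pipelines stores streaming state.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class IncrementalCounts:
    """Hypothetical incremental aggregate: counts events per type.

    Assumes the source is append-only, so a single offset is enough to
    remember which records were already processed.
    """
    offset: int = 0  # number of source records consumed so far
    counts: Dict[str, int] = field(default_factory=dict)

    def refresh(self, source: List[str]) -> int:
        """Process only records added since the last refresh; return how many."""
        new_records = source[self.offset:]
        for event in new_records:
            self.counts[event] = self.counts.get(event, 0) + 1
        self.offset = len(source)
        return len(new_records)

def full_recompute(source: List[str]) -> Dict[str, int]:
    """Batch-style equivalent: rescan the entire source on every run."""
    counts: Dict[str, int] = {}
    for event in source:
        counts[event] = counts.get(event, 0) + 1
    return counts

if __name__ == "__main__":
    source = ["click", "view"]
    agg = IncrementalCounts()
    print(agg.refresh(source))  # 2
    source += ["click", "buy"]  # new data arrives
    print(agg.refresh(source))  # 2 (only the new records)
    print(agg.counts == full_recompute(source))  # True
```

The second refresh processes only the two new events, yet the result equals a full rescan. That equivalence is what lets the same logic run in batch or streaming mode; it holds here because counting is a simple additive aggregate.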

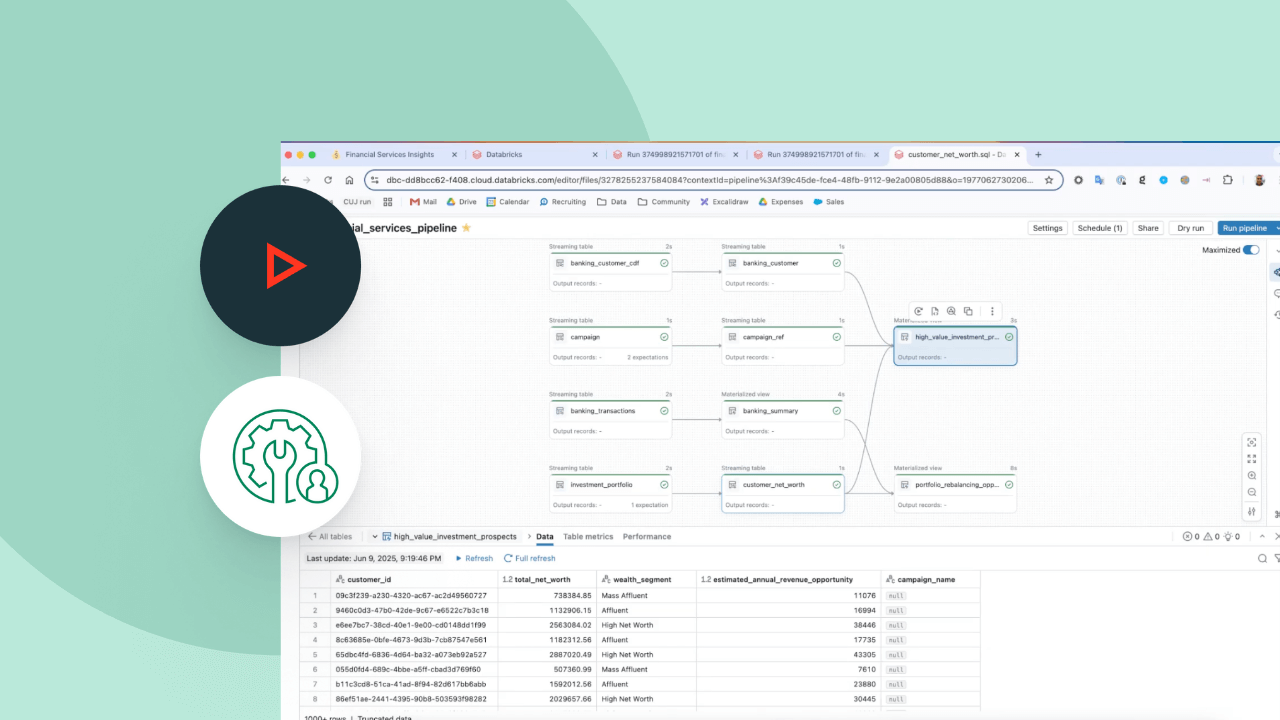

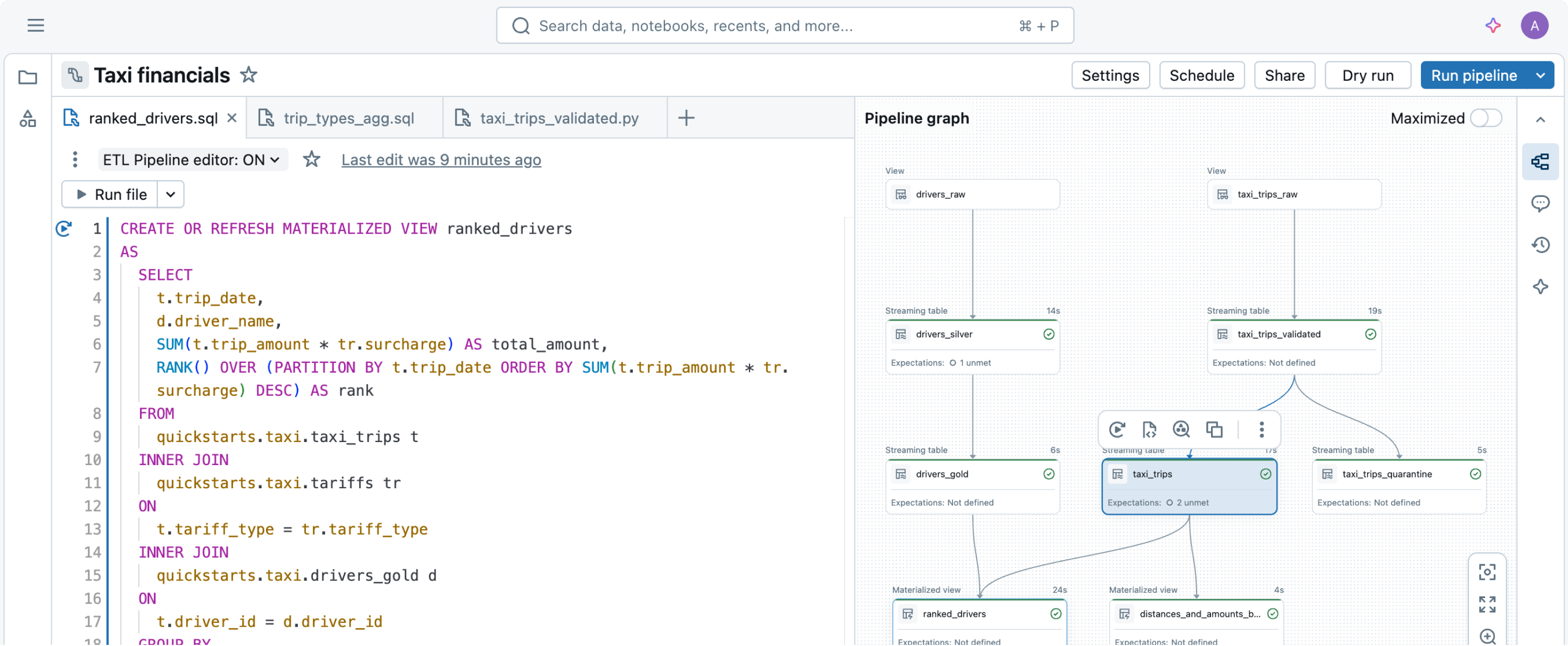

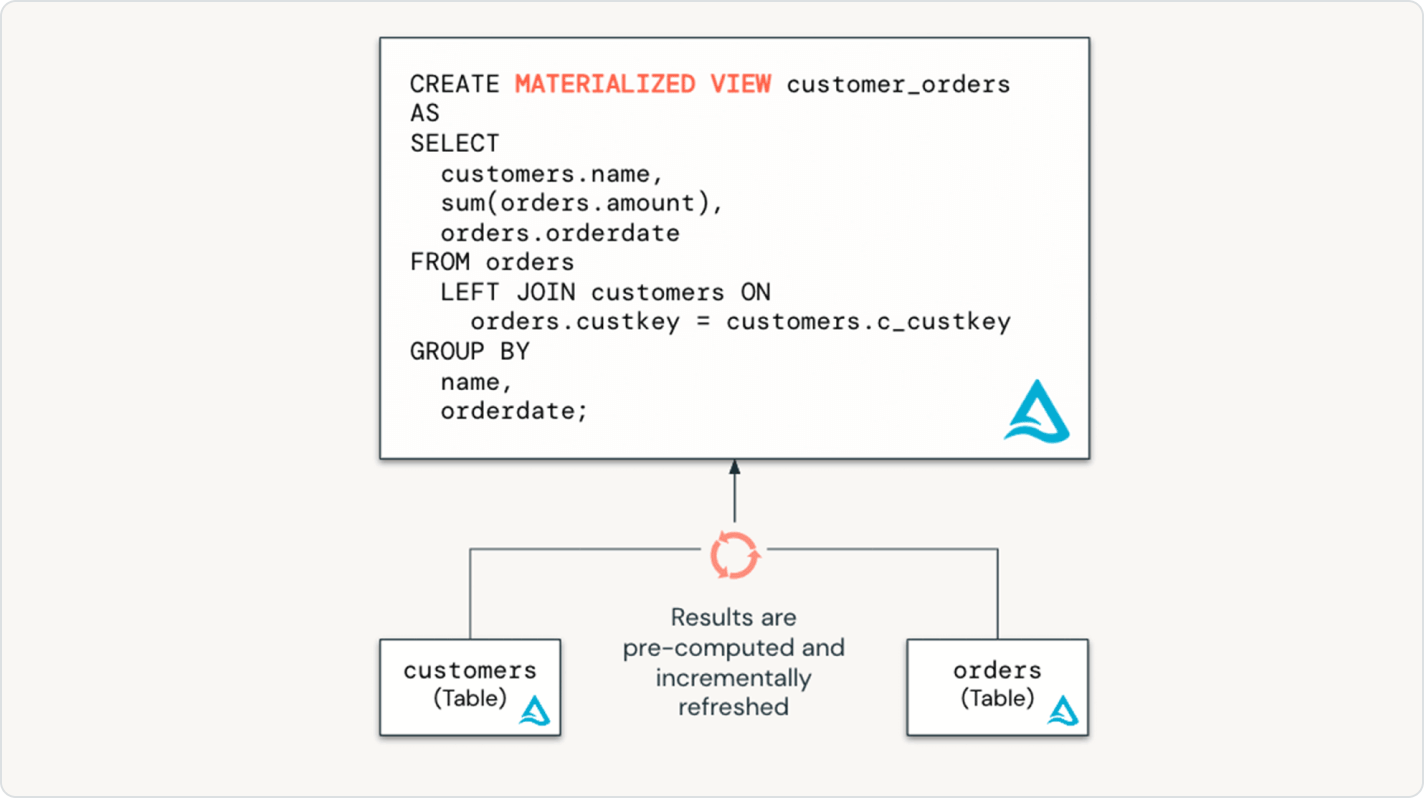

Seamlessly bring data engineering best practices to the world of data warehousing

With Spark Declarative Pipelines, data warehouse users have the full power of declarative ETL via an accessible SQL interface. Empower your SQL analysts with low-code, infrastructure-free data pipelines, unlocking fresh data for the business with minimal setup or dependencies.

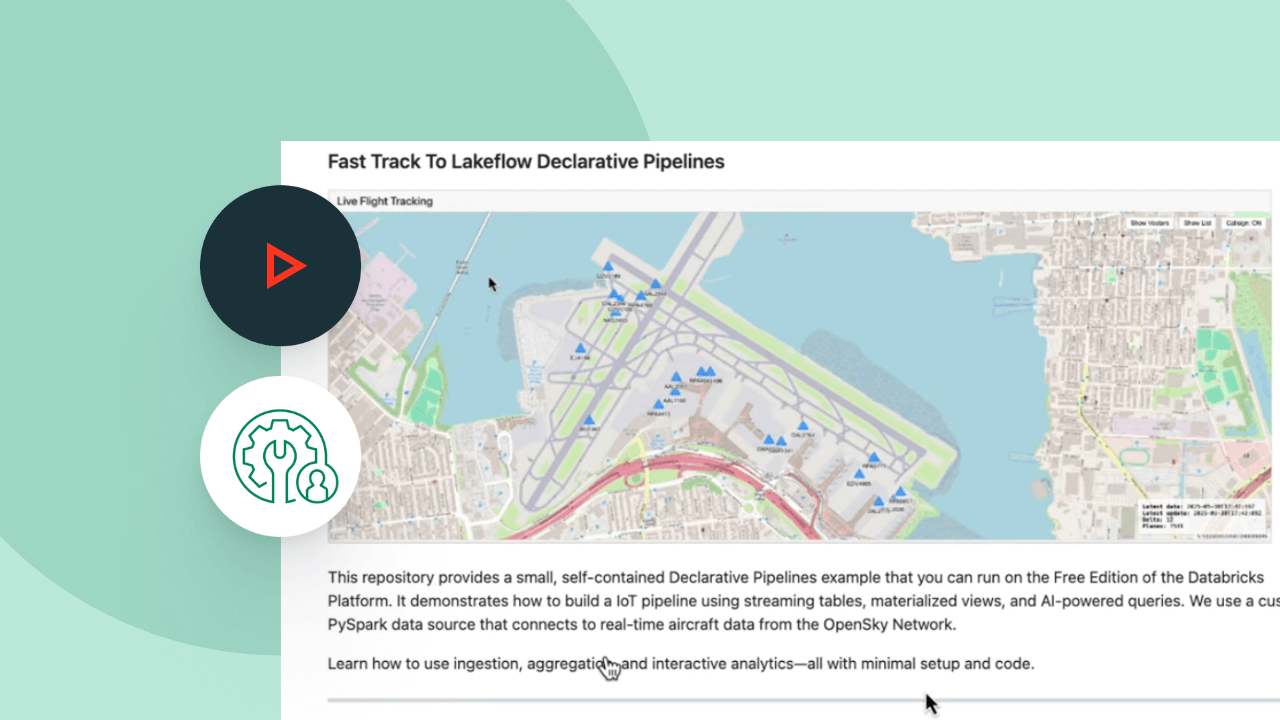

Explore Spark Declarative Pipelines demos

Usage-based pricing keeps spending in check

Pay only for the products you use, billed at per-second granularity.
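
To see what per-second granularity means in practice, compare billing a short run by the second with rounding it up to whole hours. The rate below is a hypothetical figure in arbitrary units, not actual pricing, and the hour-rounding function exists only as a contrast.

```python
import math

# Hypothetical rate in arbitrary units per hour; NOT real pricing.
HYPOTHETICAL_RATE_PER_HOUR = 2.0

def per_second_cost(runtime_seconds: float, rate_per_hour: float) -> float:
    """Bill each started second at 1/3600 of the hourly rate."""
    return math.ceil(runtime_seconds) * rate_per_hour / 3600

def per_hour_cost(runtime_seconds: float, rate_per_hour: float) -> float:
    """Contrast: bill every started hour in full."""
    return math.ceil(runtime_seconds / 3600) * rate_per_hour

if __name__ == "__main__":
    run = 15 * 60  # a 15-minute job
    print(per_second_cost(run, HYPOTHETICAL_RATE_PER_HOUR))  # 0.5
    print(per_hour_cost(run, HYPOTHETICAL_RATE_PER_HOUR))    # 2.0
```

For a 15-minute run, per-second billing charges a quarter of the hourly rate, while hour rounding charges the full hour.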

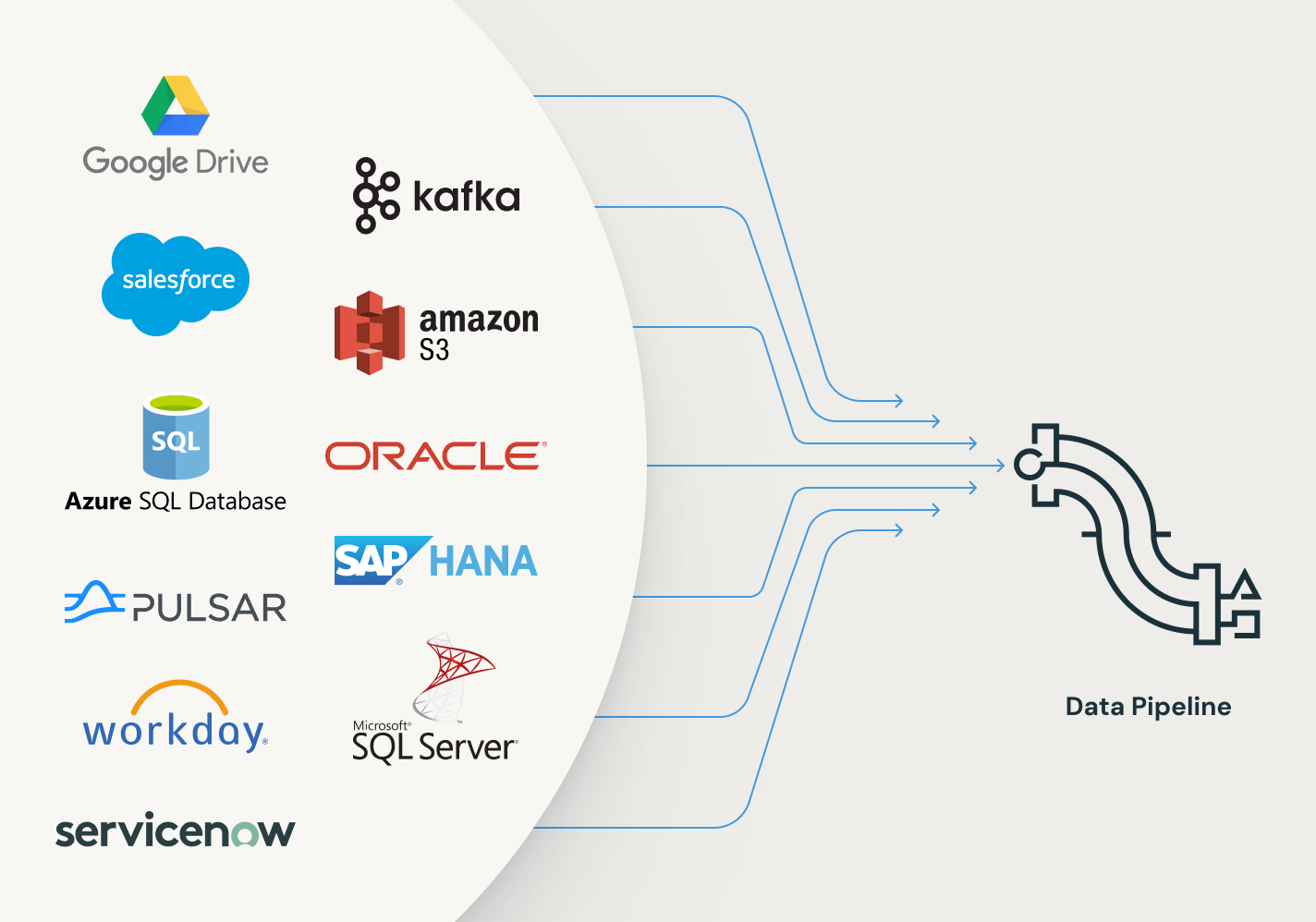

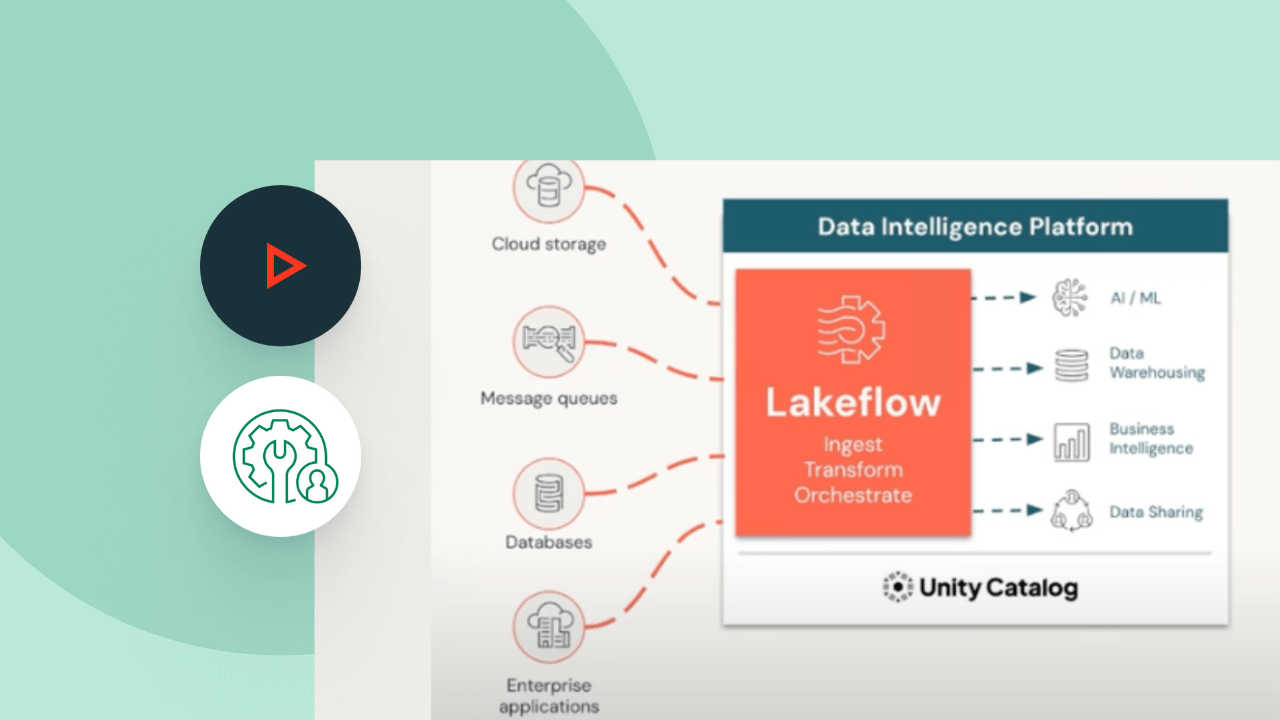

Explore other integrated, intelligent offerings on the Data Intelligence Platform.

Lakeflow Connect

Efficient data ingestion connectors from any source and native integration with the Data Intelligence Platform unlock easy access to analytics and AI, with unified governance.

Lakeflow Jobs

Easily define, manage and monitor multitask workflows for ETL, analytics and machine learning pipelines. With a wide range of supported task types, deep observability capabilities and high reliability, your data teams are empowered to better automate and orchestrate any pipeline and become more productive.

Lakehouse Storage

Unify the data in your lakehouse, across all formats and types, for all your analytics and AI workloads.

Unity Catalog

Seamlessly govern all your data assets with the industry’s only unified and open governance solution for data and AI, built into the Databricks Data Intelligence Platform.

The Data Intelligence Platform

Find out how the Databricks Data Intelligence Platform enables your data and AI workloads.
